The term Shadow AI is the artificial intelligence world’s counterpart to the well-known term Shadow IT.

Since the beginning of 2023, following the explosive arrival of ChatGPT, the use of AI solutions, especially generative AI, has increased significantly.

Many employees now use generative AI (GenAI) tools for both personal and business purposes, including:

- Content creation and editing

- Code generation

- Process optimization

- Meeting summaries

- Image generation

- Presentation enhancements

Shadow AI refers to the use of AI tools that the organization has not sanctioned, bypassing oversight from its IT and cybersecurity teams.

According to a Microsoft survey published in May 2024, approximately 75% of knowledge workers use AI at work.

What are the dangers of Shadow AI?

The unsupervised use of generative AI introduces several risks to organizational security and compliance. These include:

- Bypassing Security Protocols – Traditional data protection tools primarily flag sensitive data in standard formats (e.g., names, healthcare information). Sensitive information such as M&A details, patents, or proprietary projects, especially once AI tools rephrase or summarize it, does not match those formats and can bypass these tools.

- Data Leakage – Inputs to AI tools are often used to train models, potentially exposing sensitive information to the tools' developers and even to the public.

- Non-Compliance with Privacy Regulations – Some tools provide privacy settings that prevent inputs from being used for model training. With non-sanctioned tools, however, no one ensures these settings are enabled, which increases the likelihood of unintentional data leakage and of breaching privacy regulations.

- Low Awareness of Risks – Employees may inadvertently feed confidential information into AI tools due to a lack of awareness, increasing the risk of data exposure.

- Identity Hygiene Challenges – Many generative AI tools are accessed using personal (consumer-grade) credentials without multi-factor authentication (MFA), creating weak points vulnerable to credential theft and misuse.

- Visibility Challenges – Without proper tracking, organizations cannot fully identify which AI tools employees are using or the associated risks.

- Supply Chain Vulnerabilities – OAuth integrations granted to AI tools often retain excessive access to sensitive systems such as email, SharePoint, and OneDrive. Dormant or redundant integrations increase the risk of supply chain attacks.
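To make the first risk above concrete, here is a minimal Python sketch of a pattern-based detector of the kind traditional data protection tools rely on. The pattern names and regular expressions are simplified, hypothetical examples, not any product's actual rules. The detector flags an SSN and an email address but finds nothing in a paraphrased summary of a confidential acquisition, even though that summary is arguably more sensitive.

```python
import re

# Hypothetical, simplified patterns of the kind a traditional DLP rule set
# might use. Real products use far richer detectors; these are illustrative.
PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def flag_sensitive(text: str) -> list[str]:
    """Return the names of all patterns that match somewhere in the text."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(text)]


structured = "Employee SSN: 123-45-6789, contact jane.doe@example.com"
paraphrased = (
    "Summary: we plan to acquire a smaller competitor next quarter; "
    "keep the deal terms confidential until the board signs off."
)

print(flag_sensitive(structured))   # ['us_ssn', 'email']
print(flag_sensitive(paraphrased))  # []
```

Adding more patterns does not close this gap, because a summary of M&A plans has no fixed format to match.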

During our research, we found several notable data points.

The 10 most common generative AI tools in organizations:

- ChatGPT

- OpenAI

- Bing Chat AI

- QuillBot

- Microsoft Copilot

- Google Gemini AI (formerly Bard)

- Notion

- Poe

- Anthropic Claude

- Perplexity AI

GenAI Accounts are More Susceptible to Data Breaches

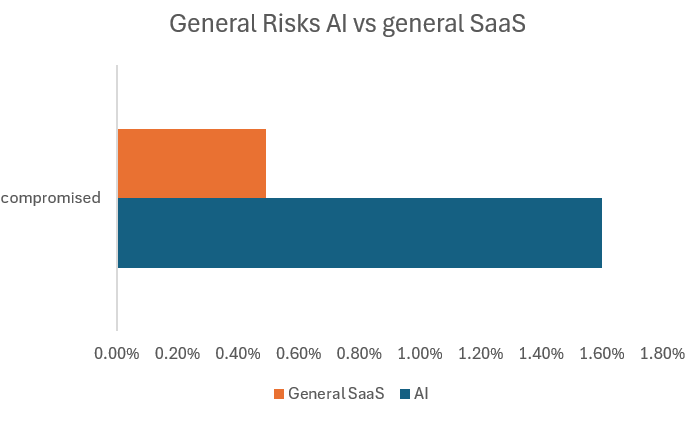

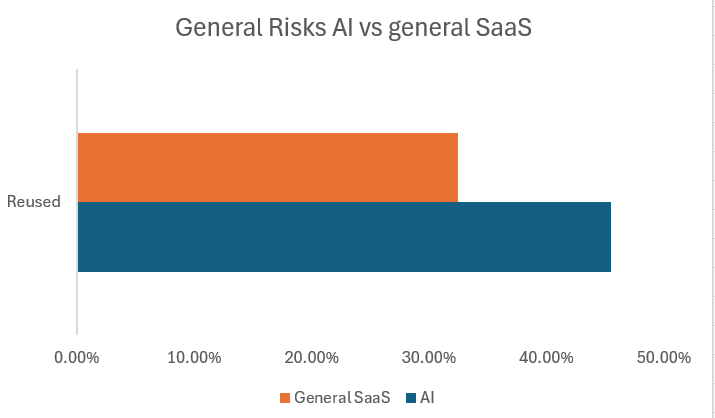

Comparing regular SaaS apps with GenAI apps, we found the following statistics, which indicate that GenAI accounts are more susceptible to data breaches:

- GenAI accounts are 3 times more likely to be compromised (that is, to have their credentials available on the dark web).

- Passwords for GenAI accounts are reused more often than those for other SaaS application accounts.

- 92% of non-SSO accounts do not have MFA enabled, so password reuse is even more dangerous: a single leaked password may be enough to take over the account.

- Between 80% and 90% of GenAI apps are unmanaged, and on average organizations use 65-75 GenAI apps (not all of which are accessed through a login).

- 70% of AI apps are approved by users and only 30% by admins, whereas regular SaaS apps are approved by an admin 63% of the time and by a user only 37% of the time.

- 37% of GenAI integrations are dormant (inactive for at least 3 months), compared with 31% of other SaaS integrations.

- Sensitive documents account for 6.5% of all uploads to GenAI platforms.

How can we manage GenAI risk?

Effective management requires a combination of policies, technology, and education:

Educate Employees – Provide real-time guidance on AI usage to encourage compliance with policies while enabling productivity.

Develop Governance Policies – Establish clear guidelines on AI usage, including approved tools, data handling practices, and restrictions.

Map and Classify Data – Identify sensitive data, where it resides, and who has access to it, and ensure that AI tool permissions align with this classification.

Implement Access Controls – Restrict access to sensitive data, monitor attempts to interact with AI tools, and manage OAuth integrations to limit unnecessary access.

Monitor Technological Advancements – Stay ahead of emerging AI tools, vulnerabilities, and threats.
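Governance policies, data classification, and real-time guidance can work together at the moment an employee tries to use an AI tool. This Python sketch assumes a hypothetical policy table mapping approved tool domains to the most sensitive data classification each may receive; the domains, the four-level classification scale, and the allow/warn/block outcomes are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    WARN = "warn"    # allowed, but the user is shown guidance
    BLOCK = "block"


# Hypothetical classification levels, ordered from least to most sensitive.
PUBLIC, INTERNAL, CONFIDENTIAL, RESTRICTED = range(4)


@dataclass(frozen=True)
class ToolPolicy:
    approved: bool
    max_classification: int  # most sensitive level this tool may receive


# Hypothetical governance policy: tool domain -> policy.
POLICIES = {
    "assistant.example.com": ToolPolicy(approved=True, max_classification=CONFIDENTIAL),
    "chat.example.com": ToolPolicy(approved=True, max_classification=INTERNAL),
}


def evaluate(tool_domain: str, classification: int) -> tuple[Decision, str]:
    """Decide how to handle sending data of a given classification to a tool."""
    policy = POLICIES.get(tool_domain)
    if policy is None or not policy.approved:
        # Unsanctioned tool: block anything non-public, otherwise guide the user.
        if classification > PUBLIC:
            return Decision.BLOCK, "Non-public data cannot be sent to an unapproved AI tool."
        return Decision.WARN, "This AI tool is not approved; consider a sanctioned alternative."
    if classification > policy.max_classification:
        return Decision.BLOCK, "This data exceeds the classification allowed for this tool."
    return Decision.ALLOW, ""


print(evaluate("chat.example.com", CONFIDENTIAL)[0])  # Decision.BLOCK
```

Returning a message with every decision is what turns enforcement into real-time guidance: the employee learns why a request was stopped and what to use instead.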

Framework for Shadow AI Risk Management

An effective framework for Shadow AI risk management should include the following key characteristics:

Visibility

- Map all AI tools used across the organization, both managed and unmanaged.

- Track associated credentials (corporate and personal).

- Identify sensitive OAuth scopes.
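A minimal sketch of the visibility step, assuming discovery events (for example, from SSO logs, browser telemetry, or OAuth grant logs) have already been collected. The `Observation` and `AppRecord` types and the app names are hypothetical. The sensitive-scope set is an illustrative subset of Microsoft Graph permissions covering email, SharePoint, and OneDrive; each organization would define its own.

```python
from dataclasses import dataclass, field

# Illustrative subset of Microsoft Graph permissions that reach email,
# SharePoint, and OneDrive. Which scopes count as sensitive is an assumption.
SENSITIVE_SCOPES = {
    "Mail.Read", "Mail.ReadWrite", "Files.Read.All",
    "Files.ReadWrite.All", "Sites.Read.All", "Sites.ReadWrite.All",
}


@dataclass(frozen=True)
class Observation:
    """One hypothetical discovery event for a user and an AI tool."""
    user: str
    app: str
    account_type: str              # "corporate" or "personal"
    scopes: frozenset = frozenset()


@dataclass
class AppRecord:
    managed: bool
    users: set = field(default_factory=set)
    account_types: set = field(default_factory=set)
    sensitive_scopes: set = field(default_factory=set)


def build_inventory(observations, managed_apps):
    """Aggregate discovery events into a per-app AI tool inventory."""
    inventory = {}
    for obs in observations:
        record = inventory.setdefault(obs.app, AppRecord(managed=obs.app in managed_apps))
        record.users.add(obs.user)
        record.account_types.add(obs.account_type)
        record.sensitive_scopes |= obs.scopes & SENSITIVE_SCOPES
    return inventory


events = [
    Observation("alice", "chatgpt", "personal"),
    Observation("bob", "copilot", "corporate", frozenset({"Mail.Read", "User.Read"})),
    Observation("carol", "notetaker-ai", "corporate", frozenset({"Files.Read.All"})),
]
inventory = build_inventory(events, managed_apps={"copilot"})
```

Here `chatgpt` surfaces as an unmanaged tool used with a personal account, and `notetaker-ai` as an unmanaged tool holding a sensitive OneDrive/SharePoint scope.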

Intelligence

- Develop risk models for AI tools and their associated credentials.

- Highlight high-risk tools and accounts for prioritization.
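For the intelligence step, one simple option is an additive risk score per account. This Python sketch assumes the signals have already been collected; the signal names, weights, and threshold are hypothetical and uncalibrated, chosen only to reflect the factors discussed in this article (unmanaged apps, personal accounts, missing SSO or MFA, reused or leaked passwords, and sensitive OAuth scopes).

```python
from dataclasses import dataclass

# Hypothetical weights; a real model would be calibrated on the
# organization's own data.
WEIGHTS = {
    "unmanaged_app": 2,
    "personal_account": 2,
    "no_sso": 1,
    "no_mfa": 3,
    "password_reused": 3,
    "credentials_leaked": 5,
    "sensitive_scopes": 3,
}


@dataclass(frozen=True)
class AccountSignals:
    """Risk signals for one user's account in one AI tool (hypothetical schema)."""
    app: str
    user: str
    unmanaged_app: bool = False
    personal_account: bool = False
    no_sso: bool = False
    no_mfa: bool = False
    password_reused: bool = False
    credentials_leaked: bool = False
    sensitive_scopes: bool = False


def risk_score(signals: AccountSignals) -> int:
    """Sum the weights of every signal that is present."""
    return sum(weight for name, weight in WEIGHTS.items() if getattr(signals, name))


def prioritize(accounts, threshold=8):
    """Return (score, account) pairs at or above the threshold, highest first."""
    scored = [(risk_score(a), a) for a in accounts]
    high = [pair for pair in scored if pair[0] >= threshold]
    return sorted(high, key=lambda pair: pair[0], reverse=True)


accounts = [
    AccountSignals("chatgpt", "alice", unmanaged_app=True, personal_account=True,
                   no_sso=True, no_mfa=True, password_reused=True),
    AccountSignals("copilot", "bob", sensitive_scopes=True),
    AccountSignals("poe", "carol", unmanaged_app=True, no_sso=True, no_mfa=True,
                   password_reused=True, credentials_leaked=True),
]
```

An additive score is easy to explain to stakeholders; a more mature model might also weight each account by the sensitivity of the data its tool can reach.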

Prevention and Remediation

- Provide real-time usage guidance to employees.

- Mitigate credential risks and enforce MFA.

- Revoke access to unneeded OAuth scopes.
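The OAuth part of remediation can be sketched as a plan that revokes dormant grants outright and trims unused scopes from active ones. This is a hypothetical Python sketch: it assumes per-scope usage data is available, approximates "at least 3 months" of inactivity as 90 days, and leaves the actual revocation to the identity provider's own admin tools. The app names are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# "Dormant" = inactive for at least 3 months, approximated as 90 days.
DORMANT_AFTER = timedelta(days=90)


@dataclass(frozen=True)
class Integration:
    """A hypothetical OAuth grant record: app, granted scopes, observed usage."""
    app: str
    granted_scopes: frozenset
    used_scopes: frozenset      # scopes actually exercised (assumed to be logged)
    last_used: datetime


def remediation_plan(integrations, now):
    """Return (app, action, scopes) tuples describing what to revoke."""
    plan = []
    for integ in integrations:
        if now - integ.last_used >= DORMANT_AFTER:
            # Dormant: revoke the whole grant.
            plan.append((integ.app, "revoke_grant", integ.granted_scopes))
        else:
            unused = integ.granted_scopes - integ.used_scopes
            if unused:
                # Active but over-privileged: trim scopes it never uses.
                plan.append((integ.app, "revoke_scopes", unused))
    return plan


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
integrations = [
    Integration("summarizer-ai", frozenset({"Mail.Read", "Files.Read.All"}),
                frozenset({"Mail.Read"}), now - timedelta(days=120)),
    Integration("slides-ai", frozenset({"Files.Read.All", "Sites.Read.All"}),
                frozenset({"Files.Read.All"}), now - timedelta(days=5)),
    Integration("notes-ai", frozenset({"Files.Read.All"}),
                frozenset({"Files.Read.All"}), now - timedelta(days=1)),
]
plan = remediation_plan(integrations, now)
```

In this example the dormant `summarizer-ai` grant is revoked entirely, `slides-ai` loses only the SharePoint scope it never used, and `notes-ai` is left alone.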

The Importance of SaaS Security

While GenAI tools pose unique challenges, SaaS security forms the foundation for mitigating broader risks across the organizational tech stack.

Key SaaS Security Risks

- Lack of SSO Enforcement: Non-SSO accounts increase exposure to credential theft and unauthorized access.

- Password Reuse and MFA Gaps: Employees reusing passwords across SaaS tools without MFA leave the organization one breach away from a catastrophic compromise.
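Password reuse can be detected without storing passwords by comparing salted fingerprints. This Python sketch is a hypothetical illustration: it assumes a trusted component (for example, a browser extension) computes a PBKDF2 fingerprint with a per-user secret salt at login time, and it flags apps that share a password with another app and lack MFA, i.e., accounts that are one breach away from compromise. The iteration count and app names are illustrative.

```python
import hashlib
import os
from collections import defaultdict

ITERATIONS = 100_000  # illustrative work factor


def fingerprint(password: str, user_salt: bytes) -> bytes:
    """Derive a slow, salted hash of a password (PBKDF2-HMAC-SHA256)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), user_salt, ITERATIONS)


def one_breach_away(logins):
    """Given (app, fingerprint, has_mfa) logins for ONE user, return the apps
    whose password is shared with another app and that lack MFA."""
    apps_by_fp = defaultdict(set)
    mfa = {}
    for app, fp, has_mfa in logins:
        apps_by_fp[fp].add(app)
        mfa[app] = has_mfa
    exposed = set()
    for apps in apps_by_fp.values():
        if len(apps) > 1:  # same password used in more than one app
            exposed |= {app for app in apps if not mfa[app]}
    return exposed


salt = os.urandom(16)  # per-user secret salt
logins = [
    ("chatgpt", fingerprint("Summer2024!", salt), False),
    ("quillbot", fingerprint("Summer2024!", salt), False),
    ("crm", fingerprint("Summer2024!", salt), True),
    ("perplexity", fingerprint("different-pass", salt), False),
]
print(sorted(one_breach_away(logins)))  # ['chatgpt', 'quillbot']
```

Because the salt is per user, fingerprints are only comparable within one user's accounts, which is exactly the scope needed to detect reuse.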

Emerging Concern: Shadow AI

As Shadow AI amplifies existing SaaS risks, organizations must adapt their security strategies to address AI-enabled vulnerabilities.

As the findings show, the use of generative AI tools increases the level of risk within an organization. Organizations should therefore pay close attention to Shadow AI and to how these tools are used.

In the SaaS world, the focus should be on identity: understanding which users consume AI services and how they use them.

Ultimately, attackers do not rely only on vulnerable infrastructure or application vulnerabilities: many lateral movement techniques exploit identities, weak credentials, and compromised passwords. Therefore, emphasis should also be placed on users.

In summary, understanding how users consume AI services provides valuable business insight into which tools are necessary and which are not. This helps protect against new attack surfaces and strengthens the security posture in this area. Shadow AI represents SaaS Security 2.0, and implementing SaaS security tools also helps secure the organization's solutions that are not AI-based.

Today, many companies and products are attempting to integrate artificial intelligence capabilities into their offerings, making it crucial to protect all cloud-based solutions.